I’m sorry to say this, but the showrunners are not even close to being good enough to sustain this kind of show. I always praise ambition no matter what, and Westworld has ambition aplenty. I wouldn’t write about it if it didn’t have excellence in it. Yet it’s a complete letdown, at least for the aspects I’m looking for.

We had two bad episodes after the first four excellent ones, with episode 6 salvaging a little even if it was mediocre overall. Then episode 7 was really quite good and salvaged a lot more of what came before. So I went into episode 8 with high expectations again… only to find the show at its worst ever in the first ten minutes.

The first scene between Ford and Bernard had the potential to be good; instead it’s rather pointless exposition meant solely for the audience. The dialogue is stilted and even out of character. It seems to want to delve into moral complexity, but it only devolves into banality. Someone living in a world permeated by artificial consciousness shouldn’t be caught off guard, yet Bernard acts like someone who suddenly finds himself in a sci-fi story. He sits there, for the most part, without even thinking about the implications of what’s going on. Bernard reacts and speaks like a character in any other TV show, regardless of the unique context here.

The writers of Westworld must be aware that the current cool thing is to have “gray” characters that are neither completely good nor completely evil. So of course now we have two contrived “sides”. One is the board of the park, which has been deliberately presented as the antagonist, driven by greed and cynical pragmatism to obtain what it wants, whatever it takes. But Ford of course can’t be simply “good” either. So they have to turn the character into this control-obsessed guy who only thinks in terms of power. Even if it makes no logical sense. The science and plot of this show don’t mix well at all.

It’s a bad scene from beginning to end, but there are at least two particular points that are truly bad. One is that Ford is shown to have this fascination with emotions, and he explains that it was with Bernard’s help that they unlocked the mystery of the “heart”. But there are no actual ideas to back this up. It’s just that human emotions are human merely because they are realistic, compared to the first hosts, which were more primitive. For someone like Ford, who has unlocked all the secrets, these displays of human emotion shouldn’t be interesting at all; they should be boring, since it was all codified, all predictable and all repeated over and over. The other bad part is a little detail: Ford says “I need you to clean up your mess, Bernard.” Excuse me, WHOSE mess? This is again just poor writing used to artificially make Ford into an unlikeable character, because this is the whole point of the scene: make Ford into another cynical villain who has pushed science too far. By manipulating Bernard and talking the way he does, he’s made into the bad guy who doesn’t have any empathy.

And that underlines where the problem of the show is. It tackles important scientific and philosophical implications, but then it reduces all that to the usual trite TV characterization. Westworld isn’t and cannot be a character driven show, because practically every TV show out there is already character driven. They all reuse the same trite formula of putting some character under unprecedented distress in order to highlight the emotions and make whoever is watching empathize amid all the drama. The big movers are always greed, power, money and various combinations of these, plus family relationships, conflicting interests and whatnot. Westworld is supposed to dig deep into the morality, now that science has exposed some unsettling truths. It’s about exploring the implications of all this. And yet we keep backpedaling into trite agendas, where all this moral complexity is lost in the face of yet another struggle for control or power. Westworld explores new territory, yet keeps populating that territory with old characters and trite writing. It wipes the slate clean, only to repopulate it with the worst tropes that plague the industry, multiplying sameness everywhere.

You need new tools to deal with new themes. Westworld proposed new themes but has only old tools to throw at them. It’s clumsy.

Following that bad scene, whose only purpose was bad, stilted exposition meant for the spectator, there’s Maeve’s scene, and that’s even worse. For me the breaking point isn’t even the overall context, but the mention of the explosive in her spine that goes off if she tries to leave. This is another unnecessary plot contraption that has no reason to exist. In a world that is almost The Matrix, where code is literally the fabric of perceived reality, the idea of an explosive in the spine is blunt and absurd. What exactly would regulate the behavior of that “bomb” if not more code? How can it be logical that if there’s a major fuck up on the scale of a host trying to leave the park, the solution is a hidden bomb? Because a host potentially leaving the park is way, WAY beyond the scale of what can be fixed by a bomb. Not to mention the bomb triggering because of a mistake. Given the context, it’s the most idiotic and potentially catastrophic idea ever. And to achieve what exactly? The “locality” of the hosts seems to be the smallest of the problems.

Again, this is all written as if the writers didn’t know how to deal with new themes, and so resorted to their usual tools. It’s all baggage due to the fact that these writers have no idea how to deal with complex themes, and so they fall back on default gears sprinkled with a slight futuristic context. And once again, even Westworld degenerates into a show that uses science fiction only as decoration, instead of as its focus.

But this means that Westworld presents new questions, only to produce the same old answers that were innocuous and useless all along. It’s the same shitty writing that is pervasive everywhere. It’s repetition disguised as something new. Trying to have it both ways, and doing poorly regardless.

Of course people on the internet don’t share my own interests, but they certainly didn’t swallow that scene with Maeve either. This is a good summary of what everyone noticed. Even worse, EW had already criticized how implausible and contrived the scene between Maeve and the two idiots is, and asked the writers themselves about it:

Nitpicky question though: Couldn’t the body shop guys just jack down Maeve’s levels to knock her out, and make some lobotomizing so-called “mistake” to take out her memory? We’ve been shown over and over the humans have so much control, it’s hard to believe they couldn’t get the upper hand on a rogue host.

Nolan: I will point you toward episode 8.

Besides the fact that this is not nitpicking AT ALL but a huge elephant in the room, that answer led everyone to expect a logical explanation in episode 8: just have patience. So now we do have episode 8, and it’s fucking ridiculous. This isn’t even bad writing; it feels like you watch a scene that belongs to one show, and then the following scene seems to come right out of a parody. And it’s not even about the ideas in that scene. It’s not because it doesn’t feel plausible. It’s that all of it is ridiculously awful. It’s very badly written, badly acted and with a very bad screenplay. It’s downright amateurish. And of course it completely breaks the tension when you have a show that tries to be all serious and dramatic and then has a scene taken out of Scrubs.

The problem is much larger, though. Westworld is a house of cards that tries to pile up lots of complexity but has zero skill in handling it. When it fails, not only is it messy, it’s even more incompetent than LOST, which also had wild ups and downs but was always inspired even in its failures. Westworld is a cool concept without any insight. Backpedaling into proven tropes still won’t work for anyone here. Trying to wrestle this back into a character driven show when everything else has failed is not going to work. People expect you to do something interesting with the ideas you scattered on the table. And yet it devolves into corporate backstabbing or AI going evil, which we’ve seen millions of times before, but now in a show that tries to be even more obtuse about it, trying to create unnecessary mysteries everywhere.

That scene between Maeve and the two idiots is exactly what happens when you start with the concept of the “robot revolution” but without its logical causes. The actual context has been built with so much care and detail that in the end there’s actually no space left for old school “AI now runs wild”. We moved past that. The implications are higher. The science the show is based on is much, much more critical and far reaching than a robot out of control. The moral implications are more subtle, deeper, unsettling. But again the writers have no tools to explore all this, so we fall back into cartoonish villainy.

Maeve has just a moment of enlightenment when she starts wondering what happened to her daughter, but then stops and says “no. Doesn’t matter. It’s all a story.” That’s the point, she questions her own reality. Meaning that reality is redefined. Deeper implications. But then she’s back to being obtuse because she follows that line with “It’s all a story created by you to keep me here.” …WHAT? No one cares where Maeve goes. She should know the “story” isn’t created for her, she’s merely a backdrop to entertain the human beings who go there. She’s a prop. She’s cardboard, exactly as she’s written, no matter how maxed out her character values are. She’s supposed to be superhumanly smart, and yet she’s one of the dumbest characters in the whole show. Her poorly explained agenda has become “I’m getting out. I’ll know I’m not a puppet living a lie.” Yep, that’s EXACTLY what some dumb idiot would think. As if by exiting the park she could outrun her own mind.

At every point Westworld fails because it cannot run with what it set up. Maeve has zero introspection; her whole agenda is to stick to the robot revolution she’s written for, even if it makes no sense. Just as it makes no sense that those two guys should follow every one of her commands. This is as terrible as saying “let’s split up” in a horror movie. It’s so far beyond believability that it isn’t even good for a laugh.

Deus ex machina is the writing style from scene to scene. Everything happens just because it’s necessary for some rough outline the writers had. The whole thing has lost all plausibility along with all its depth. Without a solid foundation all its mysteries are simply obnoxious failures.

A parenthesis: we now know Bernard was choking Elsie, because now we have a glimpse of that scene. And with that the show has put itself in the position of being utter crap no matter what path it takes. Incredible. Every hypothesis is shit. The most far fetched is that she comes back as a host. The two more plausible ones are that she’s either dead, or somehow survived to show up later as a surprise. In all these cases it’s fucking terrible writing all around. If she’s dead it’s terrible because of how contrived the scene of her going all alone to unearth dangerous mysteries was, and if she’s alive it’s terrible because of how artificial and contrived not showing the attack would be. So when she’s back we won’t have a gasp of shock, but only a groan of exasperation at the most obnoxious and predictable twist ever.

Next comes another pointless scene between William and Dolores whose only purpose is more baiting of the audience about whether there are two timelines or not. And then a scene between Ford and Charlotte that’s all about implied threats you can find in a million other shows. And as happens in a million other shows, it’s written terribly. Both characters know the other knows, yet they won’t speak clearly because otherwise the side plot would be closed right there. This sidetrack had nothing relevant to say two episodes ago when it started, and now it’s only growing more idiotic and petty. It’s unnecessary bloat added just because someone thought the show needed more conflict.

Then we have a boss fight. Then Charlotte goes to the other most obnoxious character in the show to make use of her chain of command and prepare some retaliation against Ford. Bloat once again.

There goes half the episode, where quality hit rock bottom. No other episode up to this point was so densely atrocious. From scene to scene there was absolutely nothing to salvage, and I’m surprised at how wildly the quality goes up and down from one episode to the next. But thankfully what follows is a scene that is quite good, even if not significant. We have a repetition of the shooting scene we’ve seen before, but the music has changed and the mood is more playful. The show plays with itself. Maeve is interfering with a pattern we’ve seen before, so she’s gracefully god-like in the newly revealed world where she is in control. It’s essentially sublime because at least here all the premises are solid and the scene is playful while still retaining its meaningfulness. We see what happens when reality is being manipulated, when the fictional drama collapses all around. It’s both character actualization and liberation. It works.

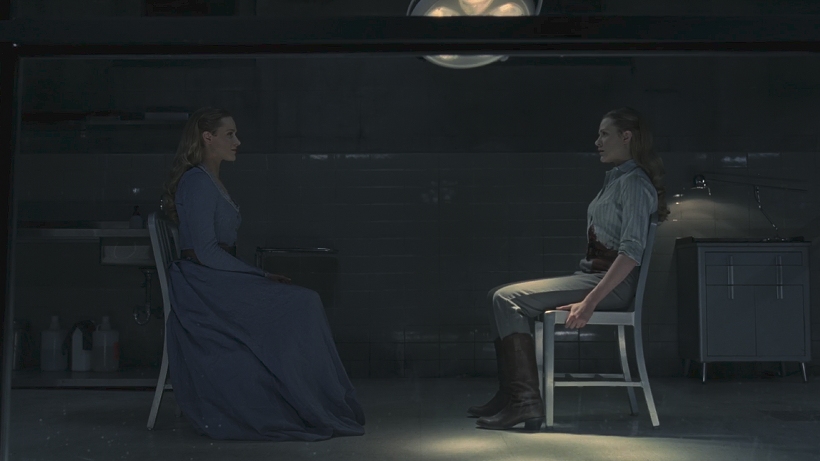

But that’s five great minutes in a bad hour of television. Next comes another scene with Bernard and Ford. But at this point neither has anything meaningful to say. The problem is that what they actually say is downright silly.

I understand what I’m made of, how I’m coded,

but I do not understand the things that I feel.

Are they real, the things I experienced?

This is the guy who spent all his life shaping consciousness and reality of the hosts, who now voices the most trite of the doubts.

The first two lines are the “qualia”, some novelty concept for him I’m sure. And the last line is just plain stupid, as the question would rather be about how you define “real”, given what you know, more than answering yes or no to that pointless question.

This is what happens when you touch the actual dilemma: how would we think if we had solved the problem of consciousness? We have a show where the replication of a human mind is a fact, but this is fictional because we don’t know how it’s done, and so when the characters think about it… they have no answer.

So they built this show on a premise, but since they don’t actually know how this premise works, the characters themselves are also clueless about what they have done.

At least when Ford speaks he still holds the pretense of slight plausibility: “The self is a kind of fiction, for hosts and humans alike.” Which is at least correct. Meaning that, to answer the questions Bernard just asked, there’s no difference between humans and hosts. And so there’s no difference between “feels” and “reality”. If the “self” has been written away, then all categories have already shifted. It’s all relative to the frame of reference.

The dialogue continues on the right track: “Lifelike, but not alive? So what’s the difference between my pain and yours?” The obvious answer would be “none”. But here the writers need to plug once again their contrived plot against Arnold, so instead of an answer we get a quotation of the usual mystery: “This was the very question that consumed Arnold, filled him with guilt, eventually drove him mad.” Thankfully after the plot plugging we also get an answer from Ford: “The answer always seemed obvious to me. There is no threshold that makes us greater than the sum of our parts, no inflection point at which we become fully alive. We can’t define consciousness because consciousness does not exist.” Hooray, that managed to be all coherent. And it concludes the other small bit of goodness in the episode.

But the scene continues, and Ford degenerates into folk psychology to the point of undermining what he just said before: “Humans fancy that there’s something special about the way we perceive the world, and yet we live in loops as tight and as closed as the hosts do, seldom questioning our choices, content, for the most part, to be told what to do next.” This bit is mostly wrong. We don’t live in loops, we very, very often question our choices, and there’s no one who tells us what to do next. He goes from discussing things literally, to metaphorically, as if the same vocabulary could apply when you completely switch the context. A scientist wouldn’t talk like that, because that’s wildly imprecise. And you cannot answer a literal question in a metaphoric way. That’s pure bullshit.

Of course the writers of the show don’t have the literal answer, and so we get the metaphorical one. It could have been fine, if it wasn’t logical that Ford actually had the literal answer too, given the context. So coherence is shattered again.

When Ford says “there is no threshold that makes us greater than the sum of our parts” he touches on the Ship of Theseus philosophical problem, which is exactly the core of the theme of the dialogue. But then he’s sidetracked into metaphor. The literal answer would have dealt with the nature of language. What’s “human”? Exactly what you want, since language is based on an agreement. You can define “human” exactly as it’s useful. It’s a word. It means whatever you want, as long as we can agree so that we can understand each other.

“I’m so sorry, Bernard. Of course you never studied any cybernetics. You’re only a dumb character in a TV show, after all.”

Next comes another pointless scene between Dolores and William, just repeating the same stuff about dream, reality and figuring out if it’s the past or the future until the writers decide to stop being obnoxious about it. Then more stupid plotting between Charlotte and the writer guy, who randomly bump into Dolores’ father, because of course convenient coincidences are fun, cueing future plot twists. And then Bernard and the security guy, to conveniently implant some implausible hole into Ford’s plan, because of course you can’t let Ford win in the end. Ford is so omniscient and omnipotent… except when he’s not because the plot requires otherwise, so he has to make his own bad move too, to offer the premise for his defeat.

Then MiB explains his own story, but he doesn’t really explain anything anyway. He says a whole lot of nothing, concluding with “I’m a good guy… Until I’m not.” Apparently his wife and daughter are “terrified” even if there’s no motivation. The whole dialogue follows a logic that makes sense only in the mind of whoever wrote it:

– She killed herself because of me.

– Did you hurt them, too?

– Never.

Apparently his wife killed herself because “she knew anyway”. Knew what? Whatever. Wife and daughter were somehow able to gaze into MiB’s soul and know he was a real villain deep inside. How? Because that’s just convenient for the plot, of course.

This is how the show knows to be dumb about the things it just stated. We move from the Ship of Theseus problem, that shows how there are no real thresholds, no convenient lines to cross. The ship IS nothing more than its parts. The “ship” is just a term we use to categorize those parts, so we are the ones to decide where to draw the line. We are the ones to decide when to call a ship a ship. There’s nothing more to it, no special quality, what is inside is outside, Ford confirmed as much. But here MiB contradicts all that. What is inside is the contrary of what’s outside. He says that a good man is not the one who proves to be a good man with his actions for all his life. Nope, a good man is the one his daughter calls good man after having scanned him with her supernatural insight that is able to gaze right into a man’s soul. We are into pure unreality. We moved from science fiction to baseless, retarded mysticism.

You are a bad man because I said so after having scanned your soul with my super sight. Prove it false if you can!

What’s a good guy, then? Isn’t it obvious? A good guy is one who rapes and kills, but deep down he has a good soul. The show says.

If deep in your heart you think you’re good (or your unbiased daughter or wife say so), then you’re a good guy. Your actions don’t matter.

That’s how Westworld tries to deal with its deep moral dilemmas.

The scene then mixes with Maeve’s and degenerates into more crap. “I had never seen anything like it. She was alive, truly alive, if only for a moment.” In a show about questioning reality you wonder why questioning words is too much. What does being “alive” mean? How is MiB able to identify the difference? What’s actually the difference? The way of walking? A particular wrinkly expression of the face? Anguish? How’s Maeve dying there any different from hosts dying everywhere else?

The show tries to state all this as if it’s factual, even if it makes no sense.

“Arnold’s game.”

Apparently “Arnold” is the keyword used for “deus ex machina”. But not meant intelligently or metalinguistically. It’s just used every time the plot doesn’t make any fucking sense: Arnold did it.

Then it seems the episode was moving toward something. Maeve’s scene links with a flashback. Maybe Bernard was actually Arnold and we could have seen Maeve killing him, at least eliminating another mystery. But nope. The whole finale of the episode flops into irrelevance. Maeve stabs herself, achieving nothing at all. In the present she’s taken away, so nothing is revealed there either; she just seems to have acted erratically the whole episode. And the epilogue with the MiB provides even more MacGuffin without any consideration: “The maze is all that matters now, and besting Wyatt is the last step in unlocking it.”

Of course, if you say so.

When the big cliffhanger leading into the very last two episodes is such a stupid MacGuffin, you know the show has gone to shit.